- in 2015 I went to a tech meetup that Dropbox were holding in a Sydney laneway, and I learned about an Aussie startup called Canva. I introduced myself to founder Cliff, did some research over the next week, and decided to talk him into hiring me. Two months later I was working there. It may well have been the most significant meetup of the hundreds I’ve been to over the years!

- in 2009 the Sydney CBD experienced a major power outage. It was a turning point in my relationship with Twitter, as the locals were having a lot of fun positing that zombies were running amok in the city. I jumped in and never looked back. (Well, until the end of 2022.)

- in 2007 Kim and Kelley Deal came into the knitting shop where I worked, and I didn’t even recognise them at first! Thank goodness my crappy mobile phone had a camera on it.

- in 2003 I knitted a bikini. No, I never wore it. Knitted bikinis look flattering on approximately 0.2% of the population.

Category: Meta

-

Highlights from the w-g archives

-

Facebook Import

As I did with Instagram and Twitter, I’ve spent the last couple of days importing all of my posts from Facebook to this blog. Similar to those projects, I requested my archive in JSON format and then used an Apple Shortcut to parse it and upload via the WordPress API. The Shortcut is very similar to the one I used for Instagram, but with a few more edge cases and IF statements since FB allows for more post types than just images. (I’m not going to bother sharing it. If you were clever enough to follow the other two Shortcuts, you can figure it out.)

My earliest post was from 2007, and altogether I had 4,083 days’ worth of posts to import. I only synced images to WordPress; I haven’t touched any videos yet (but I never really uploaded many of those to FB). It took me just short of 29 hours spaced out over the course of a week, not counting the time I spent manually reviewing and cleaning things up. (I deliberately slowed down the API requests to avoid DDoSing my own site.)

And it bears repeating: Facebook’s data archive sucks. A brief list of problems I encountered:

- Blank status updates. This happened a lot more in the older data.

- Missing data when I “shared a Page/post/photo/link/video/event” from Facebook itself. This happened a lot more in the older data.

- Missing data when posting from other sites/apps, like Eventbrite, Foursquare, Tweetdeck, Spotify, Meetup, Runkeeper, etc. This happened a lot more in the older data.

- Duplicated content – there would be a “Kris Howard shared a link” item with a URL, and then a matching status update where I actually shared the URL. This happened a lot more with the data in recent years.

- URLs that I’m fairly certain I shared in comments on posts, but that were included as top-level items with zero context. This happened exclusively with data from the past couple years.

- Inconsistent links to FB users – most of the time when I tagged someone, their name would appear like this in the data: “Hey @[1108218380:2048:Rodd Snook]”. But then in recent years, that format disappeared.

- Dead links – not Facebook’s fault, but there are so, so many.
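For anyone who would rather script the tag cleanup than do it by hand, the older mention format in the list above is regular enough for a pattern match. This is only a minimal Python sketch, not something from the Shortcut: the function name `plain_mentions` is made up, and it assumes the three colon-separated fields are always two numbers followed by a display name, as in the single example shown.

```python
import re

# Matches the older export format, e.g. "@[1108218380:2048:Rodd Snook]".
# Assumption: two numeric fields, then the display name, separated by colons.
MENTION = re.compile(r"@\[(\d+):(\d+):([^\]]+)\]")

def plain_mentions(text: str) -> str:
    """Replace each tagged mention with just the display name."""
    return MENTION.sub(lambda m: m.group(3), text)

print(plain_mentions("Hey @[1108218380:2048:Rodd Snook]"))
```

Text without any tags passes through unchanged, so it should be safe to run over every status update.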

As soon as these errors started cropping up, I had to make the call whether to stop and adjust my Shortcut to handle them, or to clean them up manually. In most cases, I decided that I’d just manually review and fix. After every couple months’ worth of import, I’d pause and page through them on the site to see if any looked weird. I’d then manually edit and tidy up any issues.

There were other oddities I noticed in the data that aren’t really errors. For example, my earliest status updates are all sentence fragments that start with a verb. This is because back in the aughts Facebook had an explicit “What are you doing right now?” prompt. Kinda funny.

The archive also included posts that I made on other people’s profiles, mostly just “Happy birthday” wishes. The data does include the name of the person I was writing to, but I couldn’t be arsed creating a special case in my Shortcut to handle that. I ended up deleting most of those and just keeping the ones that amused me or where it was a family member.

The archive didn’t include posts that I made in Groups. That may have been an option when I downloaded my archive, but I decided it wasn’t worth the effort. I’ve never been a big Group user. It also doesn’t include the comments on any of my posts. Again, that may have been an option, but I figure discussions should be ephemeral. I’m okay with not having those.

Ultimately you could argue that this import had minimal value. Most of the content is actually already on this blog, either posted natively or included already in the Twitter or Instagram imports. But there are occasional gems in there that I didn’t post anywhere else, and I’m happy I preserved those. I don’t expect anyone to ever read them, but it’s an important part of my personal data archive and I’m glad I have it.

And now I just need to finish deleting all the content over on FB…

-

Scrobbling and it feels so good

I was cleaning up old blog posts today when I saw a mention of the widget I used to have (decades ago) that showed what I was listening to. “That would be fun to recreate,” I thought. How hard could it be?

Folks, I could find no way to easily embed my last played Apple Music song. The desktop app allows you to get iframe embed code for playlists, but there’s no playlist for your Recently Played. You can create a Smart Playlist of songs you’ve recently played, but this will only include songs in your library and not songs you’ve streamed. Also, you can’t embed a Smart Playlist anyway. There are no WordPress plugins that do this, and no third party apps that I could find. There is a Developer API for Apple Music, but to register as a developer you have to pay $99 a year. Yeah, no.

For a second I thought about switching back to Spotify. Double-plus no.

Then I remembered… scrobbling. That was a thing, right? Turns out it’s still a thing. But how to scrobble Apple Music? I mostly listen on my iPhone, and the consensus seems to be that Marvis Pro is the way to go. This app is basically a wrapper for Apple Music, but it has a massively customisable UI (the Redditors love it), it scrobbles to last.fm out of the box, and it’s only $15 AUD. I figured it was worth a shot. Installed the app, signed up for last.fm, and verified that scrobbling was happening. Now to hook it up to WordPress…

This post from RxBrad has a handy JavaScript snippet that can be used in a WordPress Custom HTML widget. Too easy! I signed up for an API key and set up the script… but it wouldn’t work. In the Block Editor preview it would show the album, but when I published the widget, it would just show a broken image on the site. I noticed in the console that there were some errors about the ampersands, and I could see that WordPress was actually converting them to HTML entities. I banged my head on a wall for 10 minutes until the Snook woke up from his nap and I patiently explained the problem. Less than 2 minutes later he had solved it. Oh right! We used to always put HTML comment tags around our JavaScript, back in the day. (Insert “Do not cite the Deep Magic to me, Witch” meme.) Once I added those, everything just worked!

So yeah, there it is over in the sidebar on the homepage.

Limitations:

- Marvis Pro only scrobbles while the app is open. So if my iPhone screen goes to sleep, it won’t sync again until I wake it up. It does sync the whole history then, but it means the sidebar isn’t necessarily “live.” Do I care? Not at this point. If I do, I can apparently pay $10 to unlock background scrobbling via last.fm Pro.

- Marvis Pro doesn’t have a desktop app. If I want to scrobble from my Mac Mini, I’ll need to set up the last.fm desktop app. Can’t be arsed right now, but it’s an option.

-

Facebook Import frustrations

Yesterday I kicked off the long-gestating project to import all my old Facebook content to this website. I requested my archive several months ago, and since then I’ve been working on deleting all my content there. (It’s such a pain to delete your content without deleting the account. I’ll write up a post about that later.) Anyway, the import is now happening and you can see the posts appearing here.

The exported data is a mess though, which has made the import script a real pain. You can post to Facebook from lots of other apps (and I did, over the years), and not all of the data is in there in the same ways. There are so many cases where data is just missing from the JSON. Like, you can post to Facebook from Eventbrite, and all that’s in the export is me saying “Booked in!” and “Kris Howard posted something via Eventbrite.” but the actual event isn’t linked or listed at all. It’s just gone. I’ve found other examples too, like Instagram. (Fortunately I already imported all my Instagram posts, so I’m just skipping over those.) But I’m only 10% of the way through, and I’m doing a lot of manual cleanup work.

On the upside, this project (as well as my Instagram and Twitter imports) has taught me so much about archiving and data portability. I’m happy that I will have some sort of record of this data, even if it’s not 100% complete. I’ll never end up in this situation again, and hopefully I can help a few others realise the pitfalls of entrusting your data to corporations.

-

Nine years ago…

I was doubtful whether my project to import all my old tweets to my blog had any real value. But through them I just remembered that 9 years ago today, I got to meet and teach knitting to women and children in Manila who had been rescued from human trafficking. Canva supported the organisation and a whole group of employees went. It was one of the most worthwhile things I did in my whole tech career, and it had nothing to do with computers. 😭🩷

-

Of Blog Rolls and RSS

Today I added a Blog Roll to the site, down there in the right-hand column. It’s been a long time since I had one of those! I’ve started with just a baker’s dozen of links for the sites whose posts I always read first when they appear in my RSS reader. I actually subscribe to a lot more than that, including a bunch that I just discovered via Wölfblag and Sky Hulk’s blog rolls. I’m sure some of those will make it onto the list in the coming months.

Relatedly, I moved from Feedly to NetNewsWire earlier this year on both my Mac and my iPhone. Feedly was increasingly feeling like LinkedIn, and I resented that they kept trying to cram AI into an RSS Reader. It was very easy to click on the wrong link and end up on a Company Profile Market Intelligence page, which annoyed me. No, thank you! NetNewsWire is just what I need, and it was super easy to export and import all my subscriptions over to it.

That reminds me – as part of this Blog Renaissance™️ – I’ve noticed a lot of sites don’t have RSS feeds. People… don’t you want us to read your stuff? You have to have an RSS feed. It’s table stakes.

-

A challenge of blog questions

Blogging is back, baby! We’re even doing the thing where we write a post and then tag other people to answer the same questions. I haven’t done one of these in decades. (Link courtesy of Ethan Marcotte.)

Why did you start blogging in the first place?

Because it was 2000 and I was working as a web developer, and clearly everything we were doing was CREATING THE FUTURE. I initially started a group blog with a couple college friends (but really it was 99% me) writing about stuff happening back at our university dorm, because I was in that phase of life right after leaving college where you still think it was the most important thing ever. (I’ve since imported all those old posts over here.) A few months later I started my personal blog, which has been running continuously for 24 years. I was living overseas in London and it became a way to share my life with my family and friends back in the States. The blog quickly became the nexus of my social life, and there was a core group of early bloggers that I exchanged links and comments and mix CDs with for several years. I really miss those days.

What platform are you using to manage your blog and why did you choose it? Have you blogged on other platforms before?

I started with Blogger.com, which back then was basically a static site templating engine where it would actually compile HTML and FTP it to your hosting provider. It suffered from constant outages though, and within a year I was writing my own simple PHP+MySQL blogging CMS. I switched over to that in May 2001, and I released the code a couple weeks later. I used that system for fourteen years, adding lots of little features here and there. Eventually I got tired of constantly being hacked – and not having the skills to prevent it – and my friend John Allsopp made a radical suggestion: move to WordPress. I imported 13K+ posts and 25K+ comments, and set to work learning everything I could about how to lock it down. I’ve been using WP ever since, on various hosting providers (most recently Amazon Lightsail).

Every now and then I get annoyed with WordPress – it’s taken me years to come around to using the block editor, which I will still only do under great duress – and Rodd grumbles every time I need him to help me debug something. But mostly it just works, and it does everything I need it to.

How do you write your posts? For example, in a local editing tool, or in a panel/dashboard that’s part of your blog?

Mostly just in the WordPress (classic) editor, in a browser window. I’ve tried using the WordPress app on my phone, but I find it annoying. (Again: block editor.) A few months ago I had the idea to create some iOS Shortcuts that allow me to create photo posts straight from the Photos app on my iPhone. That’s been super useful and fun.

When do you feel most inspired to write?

In the early days, I shared multiple times a day – every little thought that came into my head. Hey, we didn’t have social media back then, all right? Links I’d found, news that was interesting, even dreams that I’d had. And then in 2009 I got into Twitter and blog posting dropped off accordingly. For like a good decade there the blog was mostly just automated posts that collated what I was sharing on other social networks.

I started making an effort to blog more when we moved to Munich, Germany during the pandemic in August 2020. It was partly getting back to one of my original blog motivations – sharing my life overseas with friends and family – and partly just not having any other social outlets when we were in lockdown. I also knew that we weren’t going to be there for more than a couple years, so the blog became my journal documenting an experience that I knew I would never want to forget. (Oh and also a Nazi bought Twitter, so I killed my account there and thus had to redirect all that tweeting energy.)

And then last year I retired from full-time work, and suddenly I had more time to think about how I interact with technology. I’ve been incensed to see how Big Tech leaders that I formerly semi-respected have turned into craven bootlickers for the new administration. It’s galvanised me to take control of my data, and to focus on my website as the center for my online presence. I’ve been importing my content from elsewhere, and I’m experimenting with ways to syndicate my content outwards. (Discussions with my friend David Edgar have been super helpful, as he’s built his own system for doing just that.)

I feel like I’m getting away from the original question though. Nowadays I mostly feel inspired to write in the morning. For the past couple weeks I’ve been making time after breakfast to “tend my digital garden,” going back through all the posts written on this date and fixing broken links, adding tags, and cleaning up dodgy HTML. I find it very soothing and therapeutic, and it often gives me ideas for things I should write about. I also usually have a couple links saved up from my evening browsing that I want to share too.

Do you publish immediately after writing, or do you let it simmer a bit as a draft?

Unless it’s a very long post that takes me a long time to write, I pretty much always publish immediately after writing.

What’s your favorite post on your blog?

Oh good grief, there are far too many. As I said, I’ve been doing this for 24 years. I can tell you what the Internet’s favourite post(s) are though: the Jamie’s 30 Minute Meals posts. More than a decade ago Rodd and I cooked and blogged 37 meals from that book, and they are still by far the most trafficked posts on the site. 🤷‍♀️

Any future plans for your blog? Maybe a redesign, a move to another platform, or adding a new feature?

I literally changed my theme like two days ago! I’m pretty happy with it. I just want a clean, responsive blogging theme with a sidebar, like in the old days. I’m still doing tweaks here and there, but mostly I’m happy.

Another thing that changed recently – I turned comments back on. I turned them off a few years ago because very few people used them anymore, and I was tired of dealing with spam. But now that I’m not using Instagram or Facebook or Twitter, I want there to be a way for people to respond to what I’m writing. And I also know that I find it very frustrating to see a great post on someone else’s blog and not be able to start a conversation about it. Let’s all bring back comments!

And lastly, Rodd and I have talked a lot in recent years about whether I should turn this into a static site. The idea is that I’d still use WordPress as the CMS for convenience – probably running locally on our home server – and then generate static HTML that I host elsewhere. It would be more secure, faster for users, and cheaper for me to host. I’ve played around a bit with WP2Static, and Rodd’s experimented with writing his own site generator in Golang. BUT there’s a big drawback – I’d lose the interactive elements of the site, like searching and commenting. I’d have to find replacements for those, and I’m not a huge fan of my options there. Still thinking about it…

Who’s next?

Most of my old blogging friends closed up shop a long time ago. I’ll go with some IRL friends that are blogging these days: David, Sathyajith, VirtualWolf.

-

Tending to my digital garden

I’ve been thinking a lot about link rot lately, and how many of the links I have shared on my blog over the years are now dead. I also have a lot of old posts that are missing titles, categories, and tags. Therefore I’ve taken a cue from Terence Eden and I’ve started a project to “tend to my digital garden” every day. I installed the On This Day plugin, and now I have an archive page that shows all the posts from this day across all the years of this site. Every day I’m going to do my best to click through those posts, checking for broken links and updating them as best I can. I’ve already had to resort to the Internet Archive several times, but here’s one bit of good news – the Sydney Morning Herald still maintains links going back 20+ years! That was a happy surprise.

-

Twitter Import via Apple Shortcut

I have successfully imported my entire Twitter history to this blog! Each day’s tweets are grouped into a single post, published on the correct day. You can see all of the tweets here. I started with a JSON file with 3.4 million(!) lines and 52,813 tweets, and ended up with 4,941 WordPress posts (equivalent to 13.5 years) and just over 10,000 images. The full import took me over 40 hours to run. 😳

So how did I do it? I quit Twitter back in 2022 after the Nazi bought it and downloaded all my data at that time. After I did my recent Instagram import using an Apple Shortcut and the WordPress API, I wondered if the same method could be used for my tweet data. Turns out it can! I’ll describe what I did in case you want to do the same.

Caveats: This script only covers importing public tweets (not DMs or anything else), and I didn’t bother with any Likes or Retweet data for each one. I also skipped over .mp4 video files. (I’ve got around 200 and I’m still figuring out how to handle them.)

Step 1 – Download your Twitter archive

I can’t actually walk you through this step, as my account is long gone. Hopefully yours is too, and you downloaded your data.

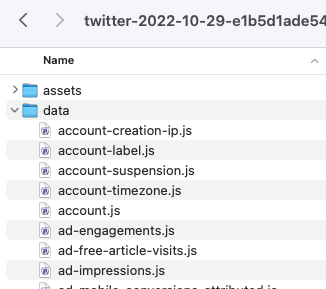

Once I unzipped the file, I found a pretty simple directory structure.

There’s a LOT of random information in there. For my import, I only needed two things: data/tweets.js for the details of the tweets, and data/tweets_media for the images.

Step 2 – Prepare the JSON

I needed to do a bit more work to prepare this JSON for import than I did with Instagram. First, I renamed tweets.js to tweets.json. When I opened it, I immediately noticed this at the very top:

window.YTD.tweets.part0 = [ { "tweet" : { "edit_info" : { "initial" : { "editTweetIds" : [ "1585904318913331200" ],

See that “window.YTD.tweets.part0 =” bit? That’s not valid JSON, so I deleted it so the file starts with just [ on the first line. Then I was good to go.
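If you’d rather not edit a multi-million-line file by hand, the same fix can be done in code. Here is a rough Python sketch (not what I actually did): the helper name `load_twitter_js` is invented, and it assumes the only non-JSON part of the file is the assignment before the first `[`.

```python
import json

def load_twitter_js(text: str):
    """Parse the contents of tweets.js, skipping the JavaScript assignment prefix."""
    # Everything before the first "[" is the `window.YTD.tweets.part0 =` part.
    start = text.index("[")
    return json.loads(text[start:])

# Tiny stand-in for the real file contents.
sample = 'window.YTD.tweets.part0 = [ { "tweet" : { "created_at" : "Fri Oct 28 08:00:28 +0000 2022" } } ]'
tweets = load_twitter_js(sample)
print(tweets[0]["tweet"]["created_at"])
```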

I then took a subset of the JSON and started experimenting with parsing it and importing. I noticed a couple problems right away. The first is how the tweet dates are handled:

"created_at" : "Fri Oct 28 08:00:28 +0000 2022",

WordPress isn’t going to like that. I knew I’d have to somehow turn that text into a date format that WordPress could consume.
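To show what that conversion involves, here is a small Python sketch that parses Twitter’s date text and prints it in ISO 8601 form. It’s illustrative only (the actual fix was done with jq, described further down), and `to_iso` is a made-up helper name. It also assumes an English locale, since the day and month names are abbreviated in English.

```python
from datetime import datetime

# Day name, month name, day, time, UTC offset, year: the layout of "created_at".
TWITTER_FORMAT = "%a %b %d %H:%M:%S %z %Y"

def to_iso(created_at: str) -> str:
    """Convert Twitter's created_at text to an ISO 8601 timestamp."""
    return datetime.strptime(created_at, TWITTER_FORMAT).isoformat()

print(to_iso("Fri Oct 28 08:00:28 +0000 2022"))  # 2022-10-28T08:00:28+00:00
```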

The next problem is that each tweet was a standalone item in the JSON. To group them by days, I’d need to loop through and compare each tweet’s date with the one before, or else import each tweet as a standalone post. As a reminder, there were more than 52,000 tweets in my file. Yeah, that sucks. Definitely didn’t want to do that.

The last problem was with media. The Instagram JSON had a simple URI that pointed me exactly to the files for each post, but Twitter doesn’t have that. Eventually I worked out that you’re meant to search the tweets_media folder for images that start with the unique tweet ID. No, really, that’s what you’re meant to do. So I’d need to deal with that.

After discussing it with my husband Rodd, he reckoned that he could use jq to reformat the JSON, fixing the dates and ordering and grouping the tweets by day. (jq is a command line tool that lets you query and manipulate JSON data easily.) After a bit of hacking, he ended up with this little jq program:

jq -c '
  [
    .[].tweet  # Pull out a list of all the tweet objects
    | . + {"created_ts": (.created_at|strptime("%a %b %d %H:%M:%S %z %Y")|mktime)}  # Parse created_at to an epoch timestamp and add it as `created_ts`.
    | . + {"created_date": (.created_ts|strftime("%Y-%m-%d"))}  # Also add the date in YYYY-mm-dd form as `created_date` so we can collate by it later.
  ]
  | reduce .[] as $x (null; .[$x|.created_date] += [$x])  # Use `reduce` to collate into a dictionary mapping created_date to a list of tweet objects.
  | to_entries  # Turn that dictionary into an array of objects like {key:<date>, value:[<tweet>, ...]}
  | sort_by(.key)  # Sort them by date
  | .[]
  | {  # Convert to a stream of objects like {date: <date>, tweets: [<tweet>, ...]}
      "date": (.key),
      "tweets": (.value|sort_by(.created_ts))  # Make sure the tweets within each day are sorted chronologically too.
    }
' > tweets_line_per_day.json

That resulted in a new file called tweets_line_per_day.json that looked like this:

Each line is a single day, with an array of all the tweets from that day in order of posting. Because this was still a huge file, I asked him to break it into 50 chunks so I could process just a bit at a time. I also wanted the file extension to be .txt, as that’s what Shortcuts needs.

split --additional-suffix=.txt --suffix-length=2 --numeric-suffixes -n l/50 tweets_line_per_day.json tweet_days_

That resulted in 50 files, each named tweet_days_00.txt, etc. For the final manipulation, Shortcuts wants the stream of objects in an array, so he needed to use jq “slurp mode.” This also had the benefit of expanding the objects out from a single line per day into a pretty, human-readable format.

for FILE in tweet_days_??.txt; do jq -s . < $FILE > $(basename $FILE .txt)_a.txt; done

The end result of all that was a set of 50 files, each named tweet_days_00_a.txt, etc, suitable for parsing by an Apple Shortcut. They each looked like this:

Much better! This, I can work with. I copied these into my Twitter archive directory so I had everything in one place.
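If you don’t have jq handy, the reshaping in this step can be approximated in Python. Treat this as a sketch of the same idea rather than a drop-in replacement for Rodd’s commands: the function names are invented, the `full_text` field in the sample data is my assumption about what the tweet text is called, and `chunk` splits by number of days rather than by lines the way `split` does.

```python
from collections import defaultdict
from datetime import datetime

TWITTER_FORMAT = "%a %b %d %H:%M:%S %z %Y"

def group_by_day(entries):
    """Turn [{"tweet": {...}}, ...] into [{"date": ..., "tweets": [...]}, ...]."""
    days = defaultdict(list)
    for entry in entries:
        tweet = dict(entry["tweet"])
        created = datetime.strptime(tweet["created_at"], TWITTER_FORMAT)
        tweet["created_ts"] = int(created.timestamp())
        tweet["created_date"] = created.strftime("%Y-%m-%d")
        days[tweet["created_date"]].append(tweet)
    # Sort the days, and the tweets within each day, chronologically.
    return [
        {"date": date, "tweets": sorted(tweets, key=lambda t: t["created_ts"])}
        for date, tweets in sorted(days.items())
    ]

def chunk(days, parts):
    """Split the list into at most `parts` consecutive chunks of similar size."""
    size = max(1, -(-len(days) // parts))  # ceiling division
    return [days[i:i + size] for i in range(0, len(days), size)]

entries = [
    {"tweet": {"created_at": "Fri Oct 28 08:00:28 +0000 2022", "full_text": "second"}},
    {"tweet": {"created_at": "Fri Oct 28 07:00:00 +0000 2022", "full_text": "first"}},
    {"tweet": {"created_at": "Thu Oct 27 23:59:59 +0000 2022", "full_text": "earlier day"}},
]
days = group_by_day(entries)
print([d["date"] for d in days])
```

Each chunk could then be written out with `json.dump` as its own .txt file, which gives the same array-of-days shape that Shortcuts wants.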

Step 3 – Create a media list

Rather than search through all the images in the media file, I created a simple text file that the Shortcut could quickly search as part of its flow. To do this, I opened a terminal window and navigated to the tweets_media folder.

ls > ../tweets_media.txt

That created a simple text file with all the named files in that folder. I also created two more blank files called permalinks.txt and errors.txt that I would use for tracking my progress through the import. I made sure all 3 files were in the top level of my Twitter archive directory.

Step 4 – Set up the Shortcut

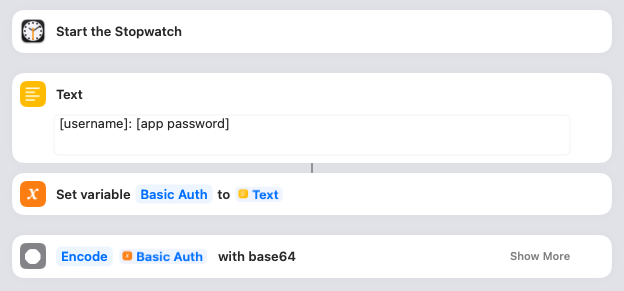

You can download the Shortcut I created here. I’ll walk you through it bit by bit.

I wanted to keep track of how long the import took, so the first thing I did was Start the Stopwatch. You can omit that if you like, or keep it in. More importantly, this is where you give the Shortcut the details it needs to access your WordPress blog. You’ll need to create an Application Password and then put the username and password into the Text box as shown. The Shortcut saves that in a variable and then encodes it.
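For the curious, the “encodes it” part is (I’m assuming) the usual HTTP Basic authentication scheme that WordPress Application Passwords use: the username and password are joined with a colon and Base64-encoded. A minimal Python sketch, with a made-up helper name and placeholder credentials:

```python
import base64

def basic_auth_header(username: str, app_password: str) -> dict:
    """Build the Authorization header for HTTP Basic authentication."""
    raw = f"{username}:{app_password}".encode("utf-8")
    token = base64.b64encode(raw).decode("ascii")
    return {"Authorization": f"Basic {token}"}

# Placeholder values only; use your own username and Application Password.
print(basic_auth_header("user", "pass"))
```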

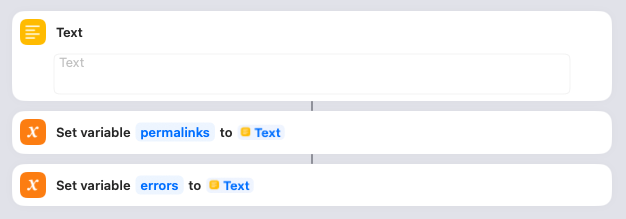

In order to show a list of all the posts that have been created at the end of the import (as well as any errors that have occurred), I create blank variables to collect them. Nothing for you to do here.

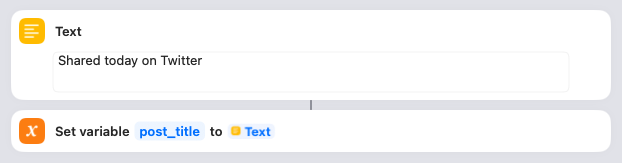

Next you need to decide what the title of each post will be. I’ve gone with “Shared today on Twitter,” but you might want to change that. The Shortcut saves that in a variable.

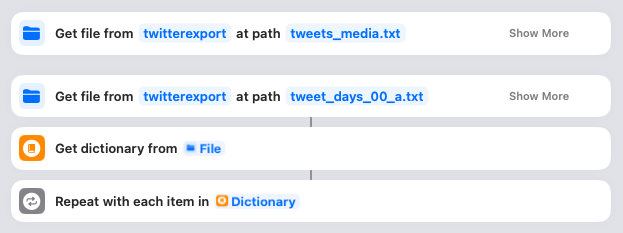

This is where it all starts to happen! You need to click on both of those “twitterexport” links and ensure they point to your downloaded Twitter archive directory. When you run the Shortcut, it’ll first look in that folder for the “tweets_media.txt” (the list of all media files) and read that into memory. Then it’ll look for the “tweet_days_00_a.txt” file and parse it, with each day becoming an item in the Dictionary. The Shortcut then loops through each of these days.

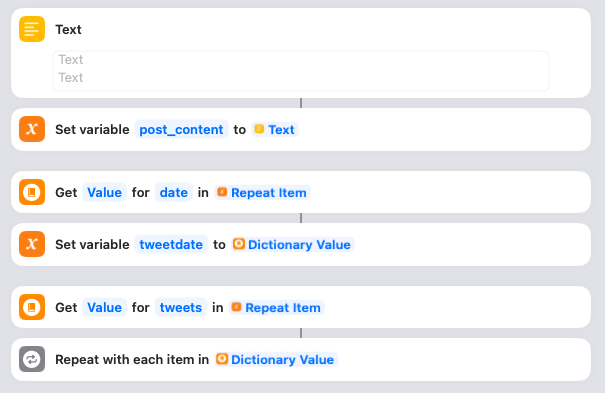

For each day, the Shortcut starts by setting a variable called post_content to blank. It also gets the date from the JSON and sets it in a variable called tweetdate. Then it grabs all the tweets for that day in preparation for looping through them. Nothing to do here.

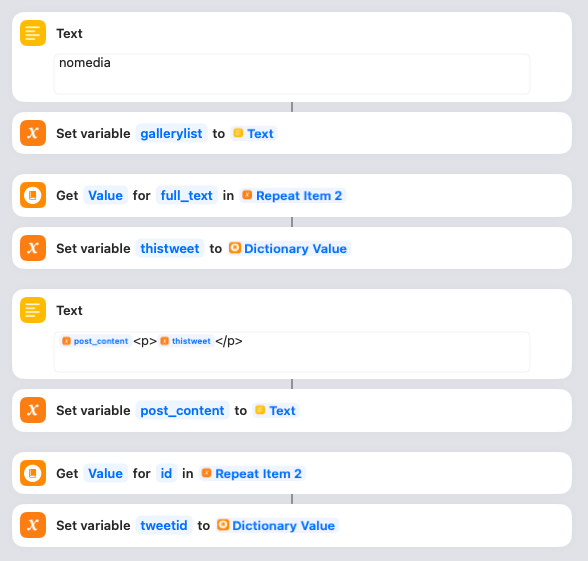

For each tweet, the Shortcut does a few things. First it sets a variable called gallerylist to “nomedia”. This variable is used to track whether there are any images for a post. Next it gets the actual text of the tweet and saves it in a variable called thistweet. Next it uses thistweet to update the post_content variable. I’ve simply wrapped each tweet in paragraph tags, but you can tweak this if you want. Lastly it gets the unique ID for the tweet and saves that in a variable called tweetid.

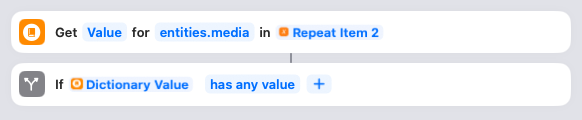

Now the Shortcut checks to see if there are any media items associated with the tweet. If not, it can skip the next section. If there are media items…

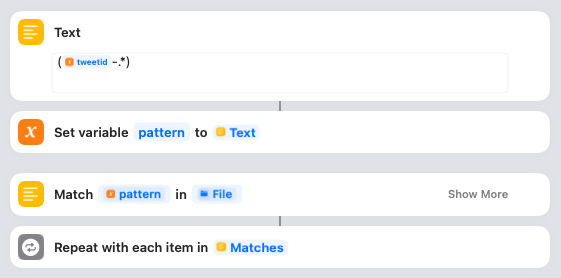

…then the Shortcut is going to search for them using regular expressions. First it defines a variable called pattern that is going to look for the tweetid followed by a hyphen and then any other characters. Then it matches that pattern to the tweets_media.txt file we read in towards the start of the Shortcut. If it finds any matching media, it will loop through them one by one.
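The same lookup is easy to picture in code. This Python sketch is only an illustration of the matching logic (the Shortcut does it with its own Match Text action); the function name and the sample filenames are hypothetical.

```python
import re

def media_for_tweet(tweet_id: str, media_listing: str) -> list:
    """Return filenames in the listing that start with '<tweet_id>-'."""
    # MULTILINE makes ^ and $ match at each line of the listing.
    pattern = re.compile(rf"^{re.escape(tweet_id)}-.*$", re.MULTILINE)
    return pattern.findall(media_listing)

# Stand-in for the contents of tweets_media.txt.
listing = "1585904318913331200-AbCd.jpg\n1585904318913331200-EfGh.png\n999-zzz.jpg\n"
print(media_for_tweet("1585904318913331200", listing))
```

Anchoring the pattern at the start of each line matters: without it, a short ID could match the tail end of a longer one.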

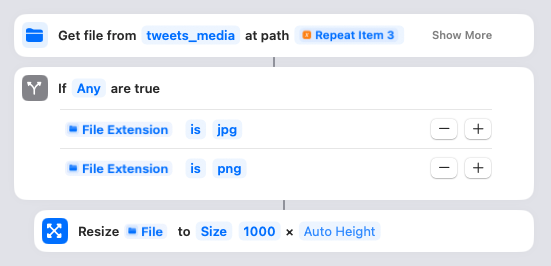

Here’s where the Shortcut actually gets the media file. The name of the file is the matched pattern from the regular expression search. You need to click on that “tweets_media” link and ensure it points to the correct folder in your Twitter archive directory. The filenames are relative to this parent, so it needs to be correct! Assuming the file is an image, it will resize it to 1000 pixels wide, maximum. (You can adjust that if you want, or take it out to leave them full-size.)

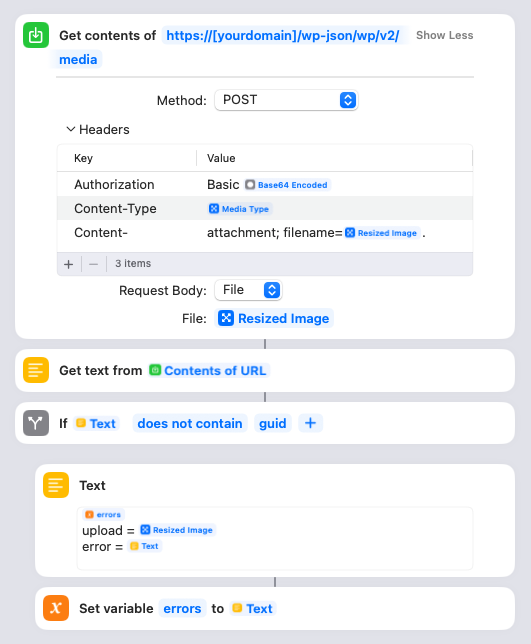

Here’s where the Shortcut actually uploads the resized media file to your site. You will need to put your own domain name into the URL there. Once the image is uploaded, the Shortcut will parse the response from your site and check if there’s an error. All successful responses should have “guid” in there, so if it’s not there, it’ll save the name of the file it was trying to upload and the error message into the errors variable.
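In code terms, the upload is a POST of the raw image bytes to the WordPress REST API media endpoint. The Python sketch below only builds the request and checks a response; it doesn’t send anything. The function names are mine, `https://example.com` is a placeholder, and I’m assuming error responses carry a “message” field.

```python
import mimetypes
import urllib.request

def build_media_upload(site: str, filename: str, data: bytes, auth_header: dict):
    """Prepare (but do not send) a media upload request."""
    content_type = mimetypes.guess_type(filename)[0] or "application/octet-stream"
    headers = {
        "Content-Type": content_type,
        "Content-Disposition": f'attachment; filename="{filename}"',
        **auth_header,
    }
    return urllib.request.Request(
        f"{site}/wp-json/wp/v2/media", data=data, headers=headers, method="POST"
    )

def upload_error(response: dict):
    """Mirror the Shortcut's check: a successful response contains "guid"."""
    if "guid" in response:
        return None
    return response.get("message", "unknown error")

req = build_media_upload("https://example.com", "1-a.jpg", b"...", {"Authorization": "Basic abc"})
print(req.get_method(), req.full_url)
```

Actually sending it would be a call to `urllib.request.urlopen(req)`, followed by parsing the JSON body.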

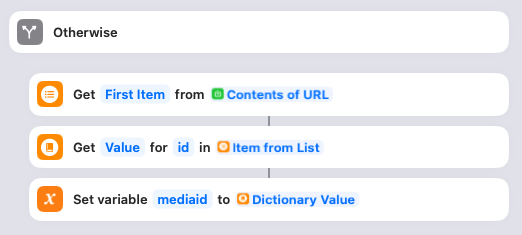

If the upload succeeded, it’ll instead save the ID of the newly uploaded image in the mediaid variable. Nothing to do here.

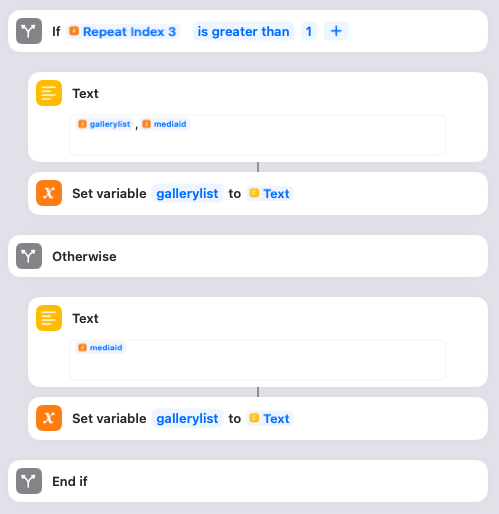

If the image is the first one attached to the tweet, the Shortcut will set the gallerylist variable to the mediaid. If there is more than one image attached to the tweet, each subsequent image will be appended with a comma separator. (You’ll see why later.) Nothing to do here.

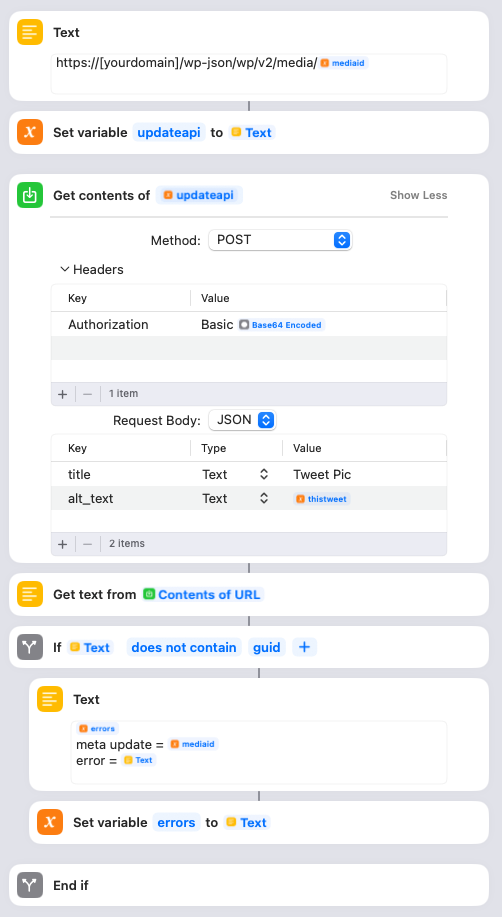

You need to update the Text box with your blog domain. This step is where the Shortcut updates the title and alt text of the image it just uploaded. The title is set to “Tweet Pic”, but you can change that to something else if you like. The alt text is set to the thistweet variable. If this update fails, the mediaid and error text are logged to the errors variable.
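As a rough picture of that update call, here’s a Python sketch that builds the request without sending it. It assumes the standard REST API media fields `title` and `alt_text`; the function name, the media ID 42, and the domain are placeholders.

```python
import json
import urllib.request

def build_media_update(site, media_id, alt_text, auth_header, title="Tweet Pic"):
    """Prepare (but do not send) a request that sets an image's title and alt text."""
    body = json.dumps({"title": title, "alt_text": alt_text}).encode("utf-8")
    headers = {"Content-Type": "application/json", **auth_header}
    return urllib.request.Request(
        f"{site}/wp-json/wp/v2/media/{media_id}", data=body, headers=headers, method="POST"
    )

update = build_media_update("https://example.com", 42, "Text of the tweet", {"Authorization": "Basic abc"})
print(update.full_url)
```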

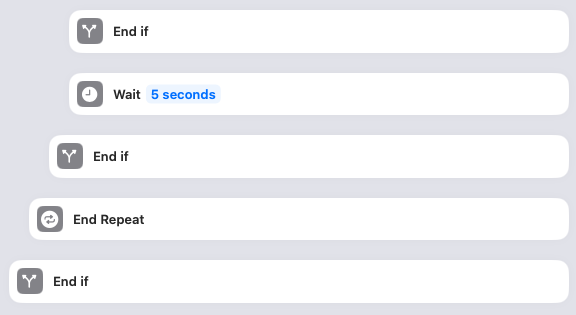

This closes the if statement checking whether the image upload succeeded, waits for 5 seconds, closes the if statement checking if the media item was an image, loops to the next media item in the post, and eventually closes the if statement checking whether the tweet had any media. You can remove the Wait if you want, or experiment with how long you want it to be. I added it because I didn’t want to DDoS my own site by blasting out API requests as fast as my MacPro can generate them. Otherwise nothing to do here.

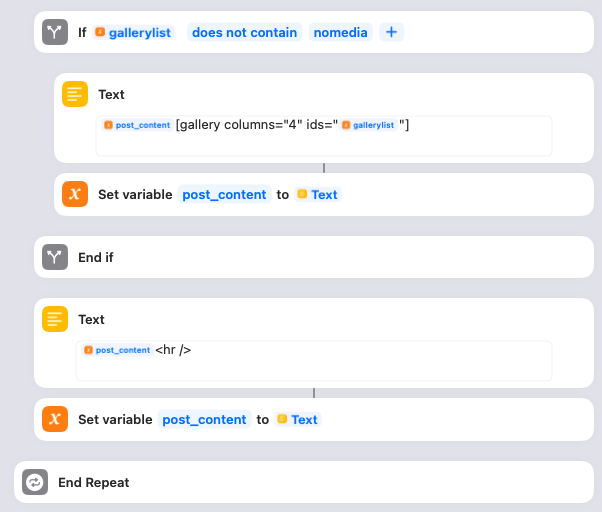

Remember, we’re still looping through each tweet. The Shortcut then checks the gallerylist variable. If gallerylist is not set to “nomedia” (because there were media items attached to the tweet), then it updates the post_content variable to append a WordPress image gallery with the list of images for the tweet. I have configured the gallery to have 4 columns of thumbnail images, but you can adjust this if you prefer something else. Then the Shortcut is finished processing this particular tweet, and it appends a horizontal rule to the post_content. Again, you can change this if you want. And then it’s time to loop to the next tweet for this day in the JSON!

(Sidenote: gallerylist gave me some difficulty at first. Initially I set it to blank and then tried to check whether it was still blank at this point, but I found that Shortcuts doesn’t have a good way of testing whether something is null. Instead it’s better to set it to something, and then check whether it’s still that.)
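The sentinel trick and the per-tweet HTML can be sketched in a few lines of Python. This is an approximation, not an export of the Shortcut: the function names are invented, and I’m assuming the gallery is written as a classic `[gallery]` shortcode with `ids`, `columns`, and `size` attributes.

```python
NO_MEDIA = "nomedia"  # sentinel meaning "no images collected yet"

def add_media(gallerylist: str, media_id: int) -> str:
    """First image replaces the sentinel; later ones are appended after a comma."""
    if gallerylist == NO_MEDIA:
        return str(media_id)
    return f"{gallerylist},{media_id}"

def render_tweet(text: str, gallerylist: str) -> str:
    """Wrap the tweet in a paragraph, add a gallery if needed, end with a rule."""
    html = f"<p>{text}</p>"
    if gallerylist != NO_MEDIA:
        html += f'[gallery ids="{gallerylist}" columns="4" size="thumbnail"]'
    return html + "<hr>"

gallery = NO_MEDIA
for uploaded_id in (101, 102):  # hypothetical media IDs
    gallery = add_media(gallery, uploaded_id)
print(render_tweet("Look at these photos", gallery))
```

Comparing against a known value sidesteps the “is it empty?” question entirely, which is the same reason the Shortcut does it.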

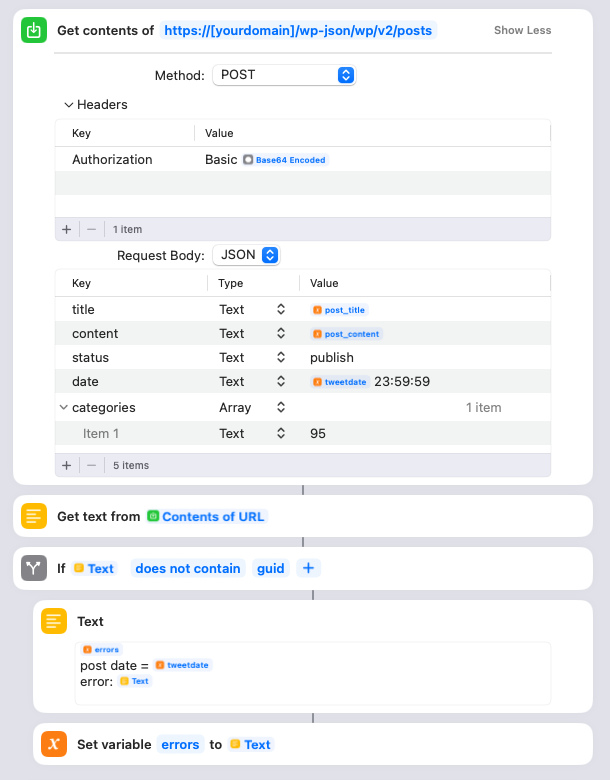

Once the Shortcut has looped through all the tweets for this particular day, it’s time to actually create the WordPress post. You need to adjust the URL to have your domain name. It uses the encoded username/password we created way up at the start. It uses the post_title variable we set at the start of the Shortcut as the title. The content is the post_content HTML that was created, and the date is the tweetdate plus the time 23:59:59. I also assign the post to a special WordPress category that I created ahead of time. You can either remove this, or change the category ID to the appropriate one from your site.

Also please note that I’ve set the status to “publish”. This means the posts will go live in WordPress right away, but since the publish date is in the past they won’t appear on your home page or in your RSS feeds.

The Shortcut will also check if there were any errors returned upon publishing the post, and if so save the post date and the error message in the errors variable.
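For reference, the request described above could be built like this in Python. Treat it as a sketch under assumptions rather than a copy of the Shortcut: it assumes the encoded username/password is HTTP Basic auth (base64 of `user:password`), that `tweetdate` is in `YYYY-MM-DD` form, and that the site is at the placeholder `https://example.com`. The function only builds the request; it doesn't send anything.

```python
import base64
import json
import urllib.request


def build_post_request(site, username, app_password, post_title,
                       post_content, tweetdate, category_id):
    """Build (but do not send) a WordPress REST API request to create a post."""
    # Assumption: Basic auth, i.e. base64("username:password").
    credentials = base64.b64encode(f"{username}:{app_password}".encode()).decode()
    payload = {
        "title": post_title,
        "content": post_content,
        "date": f"{tweetdate}T23:59:59",  # end of the day the tweets were posted
        "status": "publish",
        "categories": [category_id],      # remove or change to suit your site
    }
    return urllib.request.Request(
        f"{site}/wp-json/wp/v2/posts",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Basic {credentials}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
```

Sending it would be a matter of passing the result to `urllib.request.urlopen`, ideally against a test site first.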

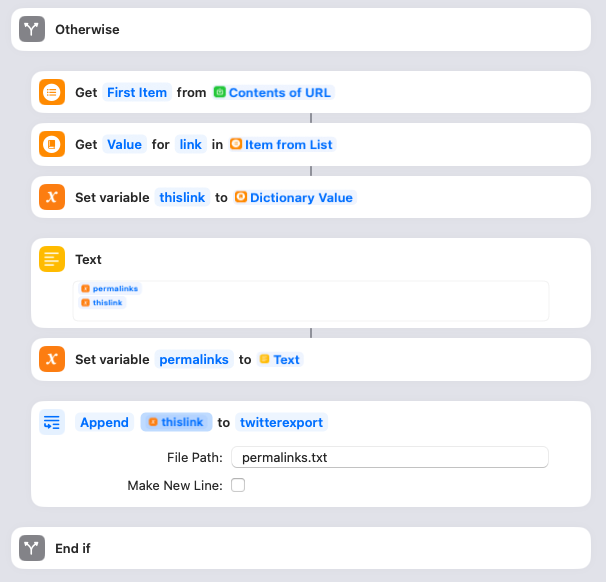

If there were no errors, the Shortcut parses the response from the WordPress API and pulls out the link to the post that’s been created. That link then gets appended to the list saved within the permalinks variable. I also append the link to the permalinks.txt file. Make sure the twitterexport directory actually points to the folder containing the permalinks.txt file!

Once the post for the day is completed, I have another “wait” set for 8 seconds to space out the loops. You can remove this, or adjust as necessary. Then the whole thing loops back around to the next day in the JSON. Once all the days are processed, the Shortcut will append the errors variable to the errors.txt file. Make sure the twitterexport directory actually points to the folder containing the errors.txt file! It’ll then click the “Lap” button on the Stopwatch so you know how long this particular import took and then click “Stop” to stop it altogether. The Shortcut will then show an alert box that says “Done!”, then one with any errors that occurred, and then another with the list of permalinks.
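The bookkeeping at the end of each loop can be sketched in Python as well. This assumes the WordPress REST API's usual response shapes (a `link` field on a created post, a `message` field on an error); `record_result` and `append_lines` are hypothetical helper names, and the file paths are whatever you pass in.

```python
import json
from pathlib import Path
from typing import Iterable, List


def record_result(response_body: str, tweetdate: str,
                  permalinks: List[str], errors: List[str]) -> None:
    """Sort one API response into the permalinks list or the errors list."""
    data = json.loads(response_body)
    if "link" in data:
        permalinks.append(data["link"])
    else:
        # Keep the day alongside the message so it can be re-run later.
        errors.append(f"{tweetdate}: {data.get('message', 'unknown error')}")


def append_lines(path: str, lines: Iterable[str]) -> None:
    """Append lines to a text file such as permalinks.txt or errors.txt."""
    with Path(path).open("a", encoding="utf-8") as handle:
        for line in lines:
            handle.write(line + "\n")
```

Opening the files in append mode means each import run adds to the existing logs instead of overwriting them.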

Step 5 – Run the Shortcut

Once I had the Shortcut properly configured, I made sure it was pointing to the first import file – tweet_days_00_a.txt – and clicked the “Play” button to start the import. Shortcuts will highlight each step as it moves through the flow. Once it finished, I checked if there were any errors and then loaded up the category on my site to see if everything looked okay. If all was well, I updated the Shortcut to point to the next import file – tweet_days_01_1.txt – and clicked Play again… over and over and over until all 50 were complete.

One last heads up – you’ll probably get a lot of Permissions requests from your Mac at first due to the large number of files you’re transferring. I just kept clicking the option to “Always Allow” and eventually it stopped asking.

After the import…

As mentioned, I still need to figure out how to handle the 200 video files that I skipped over. I’ll probably go through them individually and see if they’re worth keeping or if they were just stupid memes and GIFs.

And that’s it! Suck it, Elon!

Oh, and if you’re wondering why the stopwatch image has more than 50 laps? It’s because once or twice I accidentally started processing the same import file again and had to frantically kill Shortcuts before it duplicated all of the posts and images. 🤦‍♀️

-

Comments

I had a lovely email from my friend Emily tonight, who said she appreciated and approved of my stance on moving away from Facebook and Instagram as platforms, but missed the ability to comment on my updates. It’s a fair point. I’ve deliberately left comments turned off for now, as Rodd is working on a project to turn this blog (and RoaldDahlFans) into a static site. This will make the site more secure; it’ll load faster for you; and it’ll be cheaper for me to run. The trade-off is that anything interactive – like comment forms – won’t work anymore. But I know what Emily means, and I miss having that form of interactivity here.

There is an interesting option that I’m toying with – using Bluesky and/or Mastodon for the comments. I’ve seen several static blogs doing this with those networks, and it feels like something I could do. I’ve started doing my research so it may well be appearing here before you know it…

Updated to add: You know what? Let’s try turning comments back on and see what happens…